The UK's Online Safety Act 2023 (OSA) imposes various obligations on in-scope user-to-user services in relation to online illegal harms (among other things). Much of the detail around compliance is now contained in Ofcom's regulatory documents and guidance. In particular, Ofcom's Risk Assessment Guidance and the Illegal Content Codes of Practice for user-to-user services were published on 16 December 2024 as part of the Illegal Harms Statement. We highlight the most significant changes since Ofcom's 2023 consultation that have an immediate impact on what in-scope user-to-user service providers need to do now.

Illegal Content Risk Assessment Guidance

This guidance sets out what services need to do to comply with their illegal content risk assessment duties. The key differences between the 2023 consultation version and the 2024 statement version cover:

Priority illegal content

To begin with, the grouping and number of priority illegal harms has changed: there are now 17 kinds of priority illegal content, up from 15 (see Table 1 below, also on page 9, and Table 15 in Appendix A on pages 72-78 here). Ofcom split human trafficking and unlawful immigration into two distinct kinds of priority illegal content following stakeholder feedback, and added animal cruelty as a new kind. As part of their risk assessments, services will have to assess each of the 17 kinds of priority illegal content separately and assign an overall risk level to each one. They must also identify whether there is a risk of other illegal content being encountered on the service, including non-priority illegal content, and, if so, assign one shared (collective) risk level to that other illegal content.
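For teams that track their assessments in internal tooling, the structure described above could be sketched roughly as follows. This is a minimal illustration only, not an Ofcom template: the names `RiskLevel`, `IllegalContentRiskRecord` and `missing_assessments` are our own assumptions, and the list of kinds is passed in by the caller because the authoritative list of the 17 kinds is the one in Ofcom's Table 1.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class RiskLevel(Enum):
    NEGLIGIBLE = "negligible"
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Number of kinds of priority illegal content in the final guidance.
EXPECTED_PRIORITY_KINDS = 17


@dataclass
class IllegalContentRiskRecord:
    """Hypothetical record of one illegal content risk assessment."""

    # One individually assigned level per kind of priority illegal content.
    priority_levels: dict = field(default_factory=dict)
    # Whether other (including non-priority) illegal content may be encountered.
    other_risk_identified: bool = False
    # A single shared level covering all such other illegal content.
    other_level: Optional[RiskLevel] = None


def missing_assessments(record, priority_kinds):
    """Return the items that still need a risk level (empty list if none)."""
    if len(set(priority_kinds)) != EXPECTED_PRIORITY_KINDS:
        raise ValueError("expected 17 distinct kinds of priority illegal content")
    gaps = [kind for kind in priority_kinds if kind not in record.priority_levels]
    if record.other_risk_identified and record.other_level is None:
        gaps.append("other illegal content (collective level)")
    return gaps
```

A record that has levels for only 16 kinds, or that flags other illegal content without a collective level, would be reported as incomplete. The check is about completeness only; it says nothing about whether the assigned levels are 'suitable and sufficient'.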

Source: Ofcom

Assessment framework

This remains largely the same: services will still have to follow the 4-step risk assessment framework to carry out a 'suitable and sufficient' risk assessment that is specific to the service and reflects its risks accurately. Ofcom considers the 4-step framework to be flexible and capable of aligning with existing processes and approaches. However, Ofcom has now clarified what it expects from services at each step (for example, it adjusted Step 2 to explain how service providers need to consider existing controls when determining a risk level for each kind of priority harm) and has given clear directions as to what 'essential records' are needed for each assessment step.

Register of Risk and Risk Profiles

The Register of Risk was updated based on extensive stakeholder responses following the consultation. It now includes over 1,000 individual sources, over 130 grouped priority offences and has new chapters. Ofcom has also updated the Risk Profiles, mostly adding new associations between kinds of illegal harm and certain risk factors. You can find all of the changes to the key kinds of illegal harms associated with specific risk factors in the user-to-user Risk Profiles on pages 461-464 here.

Risk Tables

Ofcom updated its four Risk Level Tables (see pages 58-68 here). For example, Ofcom has given more emphasis to evidence of actual harm (as the tables are not meant to capture only theoretical or inherent risk) and placed less emphasis on risk factors and size. The General Risk Table now acknowledges that having the potential to impact a large number of users is not necessarily (by itself) a strong indicator of being high risk. Ofcom also added hypothetical examples of how to use the Risk Level Tables (see Table 16 from Appendix B on pages 79-84 here) and cautioned that the tables should not be applied mechanically.

Risk Levels

The assignable risk levels are negligible, low, medium or high. Ofcom has clarified its assessment expectations in several areas – for example, service providers should assess risk as it exists on the service at the point of the risk assessment. Ofcom has also clarified how it perceives negligible risk – for example, if the evidence shows that it's not possible or extremely unlikely for the kind of illegal harm to take place on the service, then the risk may be negligible irrespective of whether one or more of the conditions of low/medium/high risk is met. But if there are some relevant risk factors and the kind of illegal harm is possible, Ofcom would normally expect "comprehensive evidence" to demonstrate that the service does not pose a low, medium or high risk in order for the risk level to be assigned as negligible.
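The two scenarios described above can be expressed as a simple decision sketch. This is an illustration under our own assumptions, not Ofcom's methodology: the names `NegligibleOutcome` and `negligible_outcome` and the boolean inputs are hypothetical, and any situation not covered by the two scenarios is deliberately left undecided rather than guessed.

```python
from enum import Enum


class NegligibleOutcome(Enum):
    # Negligible may be assigned, whatever the low/medium/high conditions say.
    MAY_BE_NEGLIGIBLE = "may be negligible"
    # Negligible is only supportable with "comprehensive evidence".
    NEEDS_COMPREHENSIVE_EVIDENCE = "needs comprehensive evidence"
    # The summary above does not address this combination.
    NOT_COVERED_HERE = "not covered here"


def negligible_outcome(impossible_or_extremely_unlikely: bool,
                       harm_possible: bool,
                       has_relevant_risk_factors: bool) -> NegligibleOutcome:
    """Map the facts about one kind of illegal harm to the position described above."""
    if impossible_or_extremely_unlikely:
        # Evidence shows the harm cannot, or is extremely unlikely to, occur.
        return NegligibleOutcome.MAY_BE_NEGLIGIBLE
    if harm_possible and has_relevant_risk_factors:
        # Some risk factors exist and the harm is possible.
        return NegligibleOutcome.NEEDS_COMPREHENSIVE_EVIDENCE
    return NegligibleOutcome.NOT_COVERED_HERE
```

In practice the inputs are themselves evidence-based judgments, so a sketch like this is useful for recording the reasoning behind a negligible rating rather than for producing one automatically.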

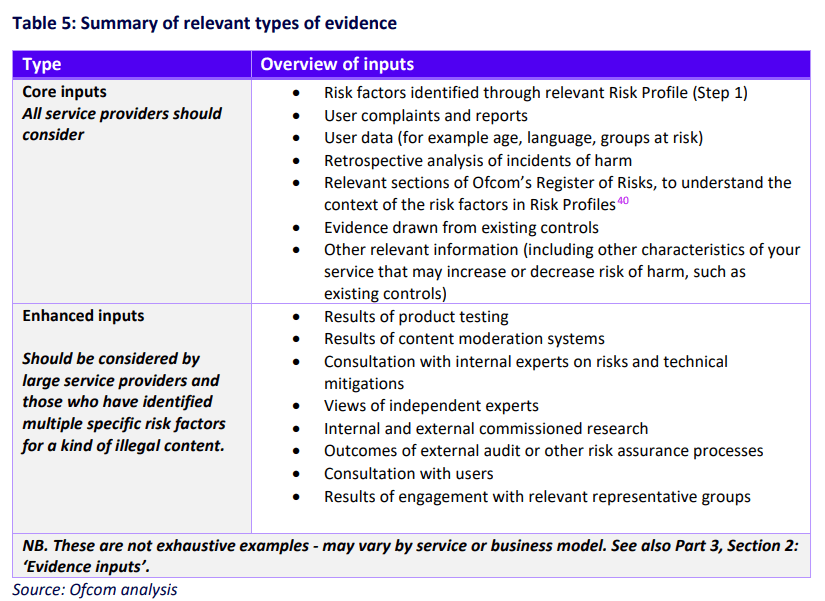

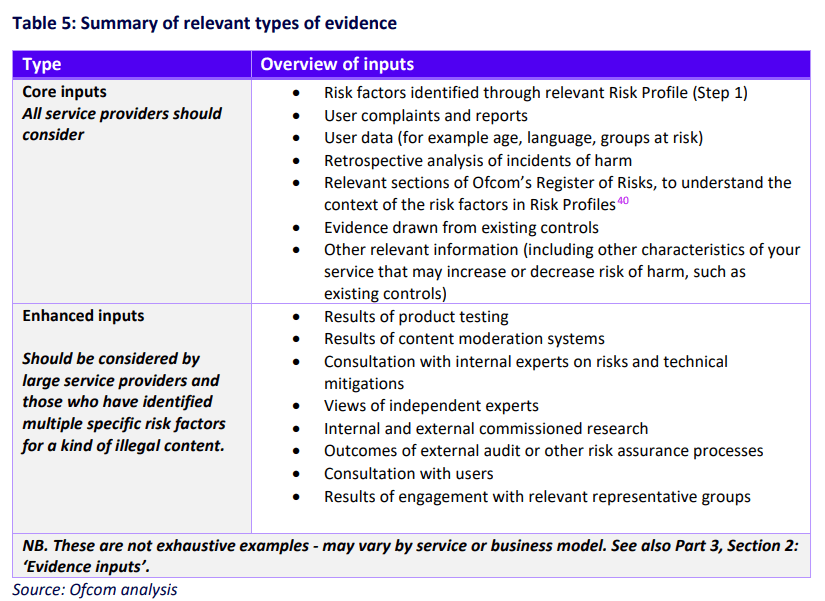

Evidence inputs

Services must still consider core inputs and enhanced inputs as types of evidence, and Ofcom has clarified some of its expectations on this (see Table 5 on page 23 here and below). Ofcom now explicitly expects large service providers to use enhanced inputs. This is in addition to its previously stated expectation that providers which have identified several specific risk factors, and providers that are not confident the core inputs gave an accurate assessment, should do the same. Evidence of harm on the service remains the key determining factor for assigning a level of risk.

How does this impact you?

Ofcom's changes to the Risk Profiles (and the Risk Tables, as well as its other clarifications) mean that even service providers who already did their risk assessments based on the consultation guidance will now have to revisit and update them accordingly, bearing in mind Ofcom's final guidance. Service providers who have not yet completed their risk assessments should do so. There is not a lot of time left, as the illegal content risk assessments must be completed by 16 March 2025.

Illegal Content Codes of Practice for user-to-user services

These set out Ofcom's recommended measures for services to comply with their applicable duties, including the illegal content safety duties and duties about content reporting and complaints procedures. Here we look at some of the main changes in the final 2024 statement version compared to the 2023 consultation version, as updated by the amendments made in the May 2024 Protection of Children consultation version.

Keyword detection regarding articles for use in fraud

Following stakeholder feedback, Ofcom dropped the recommended measure from the 2023 consultation version relating to keyword detection regarding articles for use in fraud (previously referenced as measure 4I). However, Ofcom will consult in Spring 2025 to explore a broader and more flexible measure on the use of automated content moderation technologies, including AI.

Reporting and complaints

Ofcom added two new measures in respect of reporting and complaints:

- Large services or services at medium or high risk of any kind of illegal harm should provide for complainants to opt out of receiving any non-ephemeral communications in relation to a relevant complaint (measure ICU D6).

- All services may now disregard manifestly unfounded complaints that are not appeals (measure ICU D13), provided they comply with the requirements of the recommendation (for example, services need to have a policy setting out the information and attributes that indicate a relevant complaint is manifestly unfounded, as well as a process to monitor how the policy is applied and whether it incorrectly identifies complaints, and then to review the policy accordingly).

Content moderation

Ofcom also added a new measure in respect of content moderation, although this is simply a division of the originally recommended measure on having a content moderation function. Ofcom has split that measure in two to account for consultation feedback that some services are configured in a way that means it is not currently technically feasible for them to take down content.

Under the first of these measures, all services should have, as part of their content moderation function, systems and processes designed to review and assess content they have reason to suspect may be illegal. Where there is reason to suspect this, the provider should review the content and either make an illegal content judgment or, where it is satisfied that its terms of service prohibit the type of suspected illegal content, consider whether the content is in breach of those terms.

Other changes to recommended measures

Otherwise, Ofcom has adjusted and clarified almost all of its previously recommended measures in some way, either fine-tuning terminology or applicability. For example (and this is a non-exhaustive list):

- In respect of the written statements of responsibilities (measure ICU A3), these should be for senior managers (as opposed to staff), as Ofcom recognises that the individuals concerned may not be employees. They also do not need to be UK-based. The statements should address the management of risks of illegal harm as it relates to individuals in the UK.

- In respect of tracking evidence of new and increasing illegal harm (measure ICU A5), Ofcom has made amendments to clarify this only relates to the UK.

- In respect of having a content moderation function that allows for the swift take down of illegal content (measure ICU C2), Ofcom has clarified that it does not expect services to take down content where it is not currently technically feasible for them to do so (but Ofcom will investigate where service providers claim technical infeasibility and providers are still expected to review and assess suspected CSEA or proscribed organisation content).

- In respect of the collection of safety metrics during on-platform testing of content recommender systems (measure ICU E1), Ofcom has replaced the term 'design change' with 'design adjustment' to differentiate between significant changes (which are subject to the risk assessment duty) and less significant adjustments in scope of this measure. Ofcom has further clarified that some content recommender systems are excluded from this measure, namely: product recommender systems used exclusively for the purpose of recommending goods and services; content recommender systems that recommend only content uploaded or shared by a single user; and content recommender systems used exclusively to operate a search functionality to suggest content to users in direct response to search queries.

How does this impact you?

The new Codes of Practice are expected to complete their Parliamentary process in time to be in force by 17 March 2025, so the clock is ticking. Service providers should consider (or revisit) whether they are on track to comply with Ofcom's updated recommended measures or whether they will decide to comply with their relevant Online Safety Act duties using alternative measures.

Our online safety team is on hand to support and help you navigate through Online Safety Act compliance.