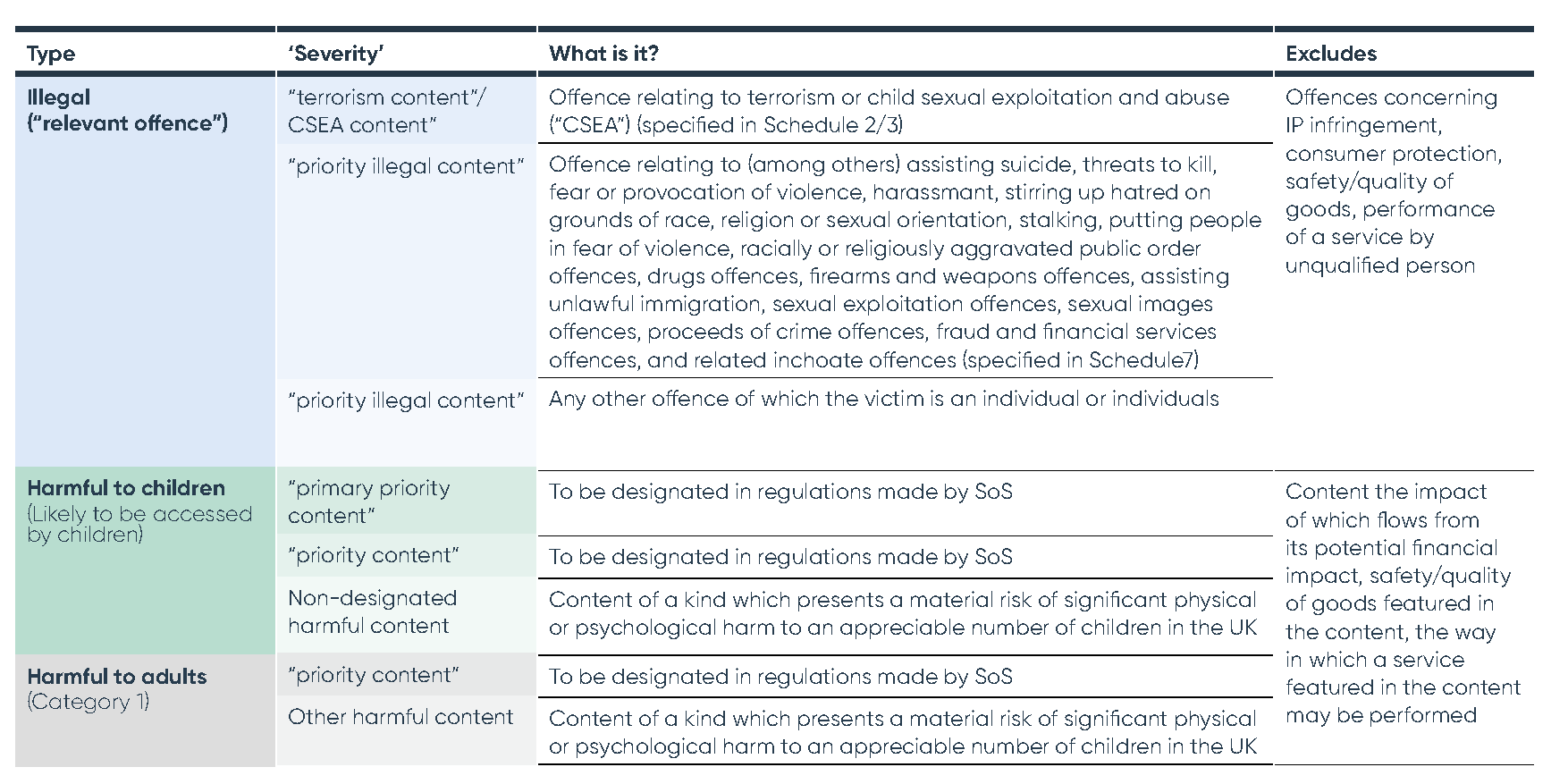

The Online Safety Bill (OSB) imposes obligations on in-scope services regarding three types of content: illegal content, content that is harmful to children, and content that is harmful to adults. It then applies further sub-categorisations within these content types. The "safety duties" that services must comply with vary depending on the content in question.

Illegal and harmful content

Illegal content

All services in scope of the OSB have obligations concerning illegal content. This comprises content which, or the possession, viewing, accessing, publication or dissemination of which, amounts to:

- an offence relating to terrorism or child sexual exploitation and abuse (CSEA); these offences are specified in Schedules to the Bill

- any other "priority offence" specified in Schedules to the Bill. The list includes offences in relation to assisting suicide, threats to kill, fear or provocation of violence, harassment, stirring up hatred on grounds of race, religion or sexual orientation, stalking, putting people in fear of violence, racially or religiously aggravated public order offences, drugs offences, firearms and weapons offences, assisting unlawful immigration, sexual exploitation offences, sexual images offences, proceeds of crime offences, fraud and financial services offences, and related inchoate offences, or

- any other offence of which the victim is an individual or individuals except offences relating to the infringement of IP rights, breach of consumer protection laws, the safety or quality of goods, or the performance of a service by a person not qualified to perform it.

Whereas, in the previous draft of the Bill, content was considered illegal if the service provider had reasonable grounds to believe there was a relevant offence, this mental element has now been removed and only content actually amounting to an offence is considered illegal.

Content harmful to children

Services likely to be accessed by children have obligations regarding content that is harmful to children. This comprises:

- Content that amounts to "primary priority content" or "priority content" – content falling within both categories will be designated in regulations to be made by the Secretary of State. The Online Harms Consultation Response indicates that these might include violent and/or pornographic content.

- Content of a kind which presents a material risk of significant harm to an appreciable number of children in the UK except where the impact flows from the content's potential financial impact, the safety or quality of goods featured in the content, or the way in which a service featured in the content may be performed. "Harm" for these purposes includes physical or psychological harm, including harm caused by individuals to themselves and by individuals to other individuals.

Content harmful to adults

Services falling within Category 1 under the Online Safety Bill have obligations concerning content that is harmful to adults (more information regarding Ofcom's role in categorising regulated services is available here). This comprises:

- Content that amounts to "priority content" – content falling within this category will be designated in regulations to be made by the Secretary of State. The Consultation Response indicates that this might include abuse that doesn't amount to an offence, and content about eating disorders, self-harm and suicide.

- Content of a kind which presents a material risk of significant harm to an appreciable number of adults in the UK except where the impact flows from the content's potential financial impact, the safety or quality of goods featured in the content, or the way in which a service featured in the content may be performed. Again, "harm" for these purposes includes physical or psychological harm, including harm caused by individuals to themselves and by individuals to other individuals.

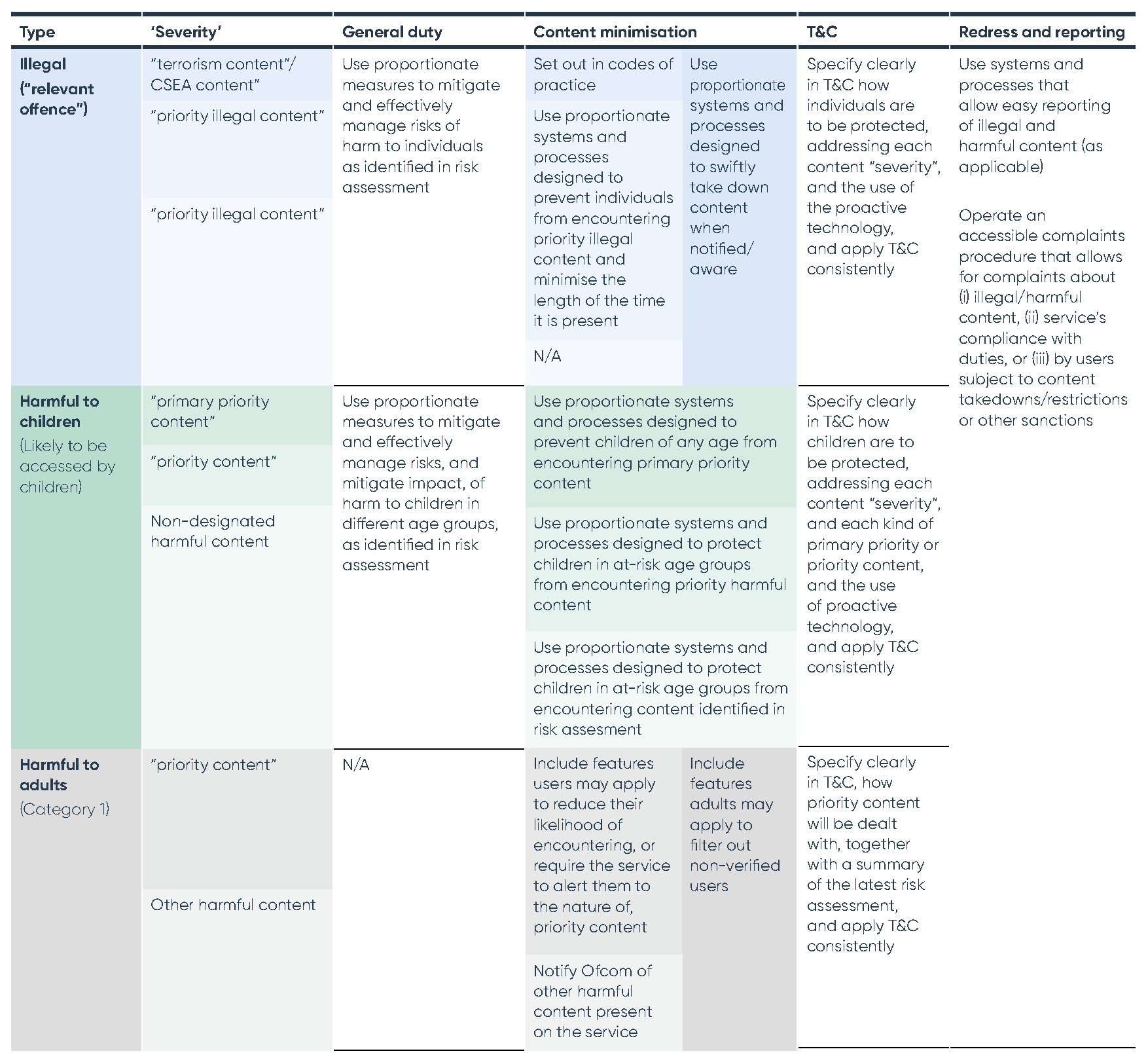

Safety duties

Different safety duties apply depending on the category of content in question. Here, we focus on the duties that apply under the Online Safety Bill to user-to-user services; the duties that apply to search engines are similar but not identical. The following sections set out the safety duties as provided in the OSB, followed by observations as to how services may be expected to comply with them.

The safety duties are closely tied to the outcome of the risk assessments that services must undertake under the Bill (more information regarding risk assessments is available here).

Illegal content

All services in scope of the Online Safety Bill must comply with the following duties:

- use proportionate measures to effectively mitigate and manage the risks of harm to individuals as identified in the illegal content risk assessment

- use proportionate systems and processes designed to prevent individuals from encountering priority illegal content by means of the service and minimise the length of time it is present

- use proportionate systems and processes designed to swiftly take down illegal content when notified or otherwise aware of it

- specify clearly in terms and conditions how individuals are to be protected from illegal content, addressing terrorism and CSEA content, priority illegal content, and other illegal content separately, and the use of proactive technology, and apply terms and conditions consistently, and

- report all detected CSEA content present on the service to the National Crime Agency.

Content harmful to children

Services likely to be accessed by children must comply with the following duties regarding those parts of the service that it is possible for children to access:

- use proportionate measures to effectively mitigate and manage the risks of harm, and the impact of harm, to children in different age groups as identified in the children's risk assessment

- use proportionate systems and processes designed to prevent children of any age from encountering primary priority content – the Bill refers to using age verification or other age assurance measures as examples

- use proportionate systems and processes designed to protect children in age groups assessed to be at risk from the relevant content from encountering priority and any non-designated content harmful to children – again, the OSB refers to age assurance as an example

- specify clearly in terms and conditions how children are to be prevented or protected from encountering harmful content, addressing each kind of primary priority and priority content separately (eg violent content and pornographic content would need to be individually addressed if they fall within these categories), and the use of proactive technology, and apply terms and conditions consistently.

Content harmful to adults

For Category 1 services that have obligations concerning content harmful to adults, there is no general duty to mitigate risk of harm and no specific content minimisation duties. However, these services do need to:

- have and consistently apply terms and conditions that specify how each type of priority content will be dealt with by the service. The options include taking down the content, restricting users' access to the content, and limiting the recommendation or promotion of the content

- summarise in the terms of service the findings of the most recent adults' risk assessment

- notify Ofcom on becoming aware of any non-designated content that is harmful to adults present on the service, including the kinds of content identified and their incidence

- where proportionate, include features that adult users may apply to increase their control over harmful content by reducing their likelihood of encountering priority content or requiring the service to alert them to the harmful nature of priority content

- include features that adult users may apply to filter out non-verified users by preventing interactions from non-verified users and reducing the likelihood of encountering content posted by non-verified users.

These duties reflect the idea that adults should be empowered to keep themselves safe online.

All illegal and harmful content – reporting and redress

All services have obligations to operate using systems and processes that allow users and other affected persons to report illegal and harmful content. They are also required to operate accessible complaints procedures, including to allow complaints by users whose content has been taken down or restricted, or who have suffered other sanctions as a result of their content.

Fraudulent paid-for advertising

While outside the scope of this article, it's also worth mentioning at this point that the 2022 version of the OSB introduces a new legal duty which requires the largest social media platforms and search engines to take steps to prevent fraudulent paid-for advertising from appearing on their services. See here for more.

How to comply – current government guidance and future codes of practice

The OSB provides that, in order to fulfil safety duties, services will need to take measures (if proportionate) in areas including regulatory compliance and risk management arrangements, the design of functionalities, algorithms and features, policies on terms of use and user access, content moderation, functionalities allowing users to control the content they encounter, user support measures, and staff policies and practices, among others. Proportionality is to be assessed considering the findings of the service's risk assessments and the size and capacity of the service.

Aside from this fairly limited guidance in the Bill itself, how might services be expected to comply and what steps can be taken now to prepare?

Codes of Practice

The OSB provides that Ofcom must publish Codes of Practice describing recommended steps for compliance with duties. Services will be treated as having complied with their obligations under the OSB if they take the steps described in a Code of Practice.

Following the Codes of Practice will not be the only way for services to comply with their duties but may (depending on their contents) be the easiest, or at least the most certain, way to ensure compliance. In drafting the Codes of Practice, Ofcom will need to consult representatives of regulated service providers, users, experts and interest groups – there will therefore be an opportunity for affected stakeholders to make their views known before these important documents are finalised.

In the meantime, the government has published voluntary interim codes of practice for terrorism and CSEA content. The examples of good practice outlined in these Codes set a fairly high bar in terms of content minimisation measures (eg suggesting that content be identified using in-house or third-party automated tools in conjunction with human moderation) and also contain more aspirational obligations (eg regarding industry cooperation). This might reflect the seriousness of these particular categories of content and/or the voluntary nature of the Codes of Practice, but the Codes are a useful guide to best practice for services preparing for compliance.

Current government guidance

The government has also published several guidance documents regarding online safety as a general matter. These are not linked to the OSB but may be indicative of the types of measures services may be expected to take under it. The guidance is clear that the preference is for safety by design – ie services should build features and functionality that promote user safety and prevent the dissemination of illegal and harmful content rather than merely taking down content when aware of it. Measures suggested by the guidance include:

- requiring users to verify their accounts

- using age assurance technology to verify user age

- defaulting to the highest available safety settings (eg having content shared by users visible only to the user's friends with location turned off)

- limiting functionality for children or preventing children from departing from default high safety settings

- making reporting functionality available in different locations (eg in private messaging functionality as well as when viewing public posts) and prompting users to make a report when suspicious activity is detected

- using automated safety technology to identify illegal and harmful content, supported by human moderation

- prompting users to review their settings

- labelling content that has been fact-checked by an official or trustworthy source, and

- not allowing algorithms to recommend harmful content.

The guidance also includes a specific 'one stop shop' for businesses for child online safety.

Next steps

There are a number of areas where further legislation or guidance is required in order for services to more fully understand their obligations in relation to illegal and harmful content under the Online Safety Bill. These include regulations to be made by the Secretary of State setting out "priority" categories of content harmful to children and adults and, importantly, Ofcom's Codes of Practice, which will operate as a form of 'safe harbour' for compliance. Services wishing to prepare for and engage with the Online Safety legislation in the meantime can take note of existing government guidance and participate (potentially via an industry group) in Ofcom's consultation (when it is published) regarding the Codes of Practice.

Find out more

To discuss any of the issues raised in this article, please reach out to a member of our Technology, Media & Communications team.